Variable selection

The goal of this article is to gather thoughts about variable selection.

Consensus features nested cross-validation

Bioinformatics, Volume 36, Issue 10, 15 May 2020, Pages 3093–3098

Background

Feature selection

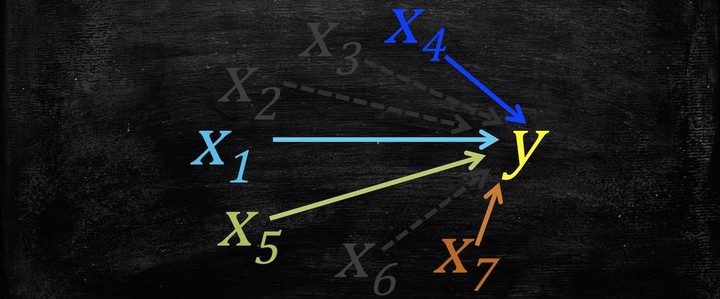

There are multiple ways to combine feature selection with classification to address the bias-variance tradeoff. Wrapper methods train prediction models on subsets of features, using a search strategy to find the best set of features and the best model (Guyon and Elisseeff, 2003). Optimal wrapper search strategies can be computationally intensive, so greedy methods such as forward or backward selection are often used. Embedded methods incorporate feature selection into the modeling algorithm itself. For example, the least absolute shrinkage and selection operator (Lasso) (Tibshirani, 1997) and the more general Elastic Net (labeled glmnet in the R package) (Zou and Hastie, 2005) optimize a regression model with penalty terms that shrink the regression coefficients while the model is fitted; features whose coefficients are shrunk exactly to zero drop out of the model. The Elastic Net hyperparameters are tuned by cross-validation (CV).
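A minimal sketch of an embedded method, assuming a squared-error loss, standardized features, a centered response, and the glmnet-style elastic-net penalty with mixing parameter `alpha` (`alpha = 1` gives the Lasso). The function names and the synthetic data are hypothetical and not from the source. It shows how the soft-thresholding step in cyclic coordinate descent sets the coefficients of irrelevant features exactly to zero, which is what makes the penalty perform selection.

```python
import random


def soft_threshold(z, gamma):
    """S(z, gamma) = sign(z) * max(|z| - gamma, 0); this step zeroes small coefficients."""
    if z > gamma:
        return z - gamma
    if z < -gamma:
        return z + gamma
    return 0.0


def standardize(X):
    """Scale every column to mean 0 and mean square 1 (assumed by the update below)."""
    n, p = len(X), len(X[0])
    out = [row[:] for row in X]
    for j in range(p):
        col = [row[j] for row in X]
        mean = sum(col) / n
        sd = (sum((v - mean) ** 2 for v in col) / n) ** 0.5
        for i in range(n):
            out[i][j] = (X[i][j] - mean) / sd
    return out


def elastic_net(X, y, lam, alpha, n_iter=200):
    """Cyclic coordinate descent (hypothetical helper) for
        (1/2n) * ||y - X b||^2 + lam * (alpha * ||b||_1 + (1 - alpha) / 2 * ||b||_2^2)
    with standardized X and centered y, so no intercept is fitted."""
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    resid = list(y)  # residual y - X beta, with beta = 0 at the start
    for _ in range(n_iter):
        for j in range(p):
            # (1/n) * sum_i x_ij * (partial residual that excludes feature j)
            rho = sum(X[i][j] * (resid[i] + X[i][j] * beta[j]) for i in range(n)) / n
            new_bj = soft_threshold(rho, lam * alpha) / (1.0 + lam * (1.0 - alpha))
            if new_bj != beta[j]:
                delta = new_bj - beta[j]
                for i in range(n):
                    resid[i] -= X[i][j] * delta  # keep the residual in sync with beta
                beta[j] = new_bj
    return beta


# Synthetic data (hypothetical): only features 0 and 1 influence y.
random.seed(0)
n = 200
X = [[random.gauss(0, 1) for _ in range(5)] for _ in range(n)]
y = [3.0 * row[0] - 2.0 * row[1] + random.gauss(0, 0.5) for row in X]
Xs = standardize(X)
y_mean = sum(y) / n
yc = [v - y_mean for v in y]
beta = elastic_net(Xs, yc, lam=0.5, alpha=1.0)  # alpha = 1: pure Lasso penalty
print([round(b, 2) for b in beta])
```

Here `lam` and `alpha` are fixed by hand for illustration; in practice they would be tuned by CV, as the paragraph above notes.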

Cross-validation

CV is another fundamental operation in machine learning that splits data into training and testing sets to estimate the generalization accuracy of a classifier for a given dataset (Kohavi, 1995; Molinaro et al., 2005). It has been extended in multiple ways to incorporate feature selection and parameter tuning. A few of the ways CV has been implemented include leave-one-out CV (Stone, 1974), k-fold CV (Bengio et al., 2003), repeated double CV (Filzmoser et al., 2009; Simon et al., 2003) and nested CV (nCV) (Parvandeh and McKinney, 2019; Varma and Simon, 2006).
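A minimal sketch of k-fold CV, assuming a hypothetical nearest-centroid classifier and synthetic two-class data (neither comes from the source). Each fold is held out once as the test set while the model is trained on the remaining folds, and the fold accuracies are averaged into the generalization estimate; setting `k = len(X)` turns it into leave-one-out CV.

```python
import random


def k_fold_indices(n, k, seed=0):
    """Shuffle 0..n-1 and deal the indices into k nearly equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[f::k] for f in range(k)]


def nearest_centroid_fit(X, y):
    """Hypothetical toy classifier: store one mean vector per class label."""
    centroids = {}
    for label in set(y):
        rows = [x for x, t in zip(X, y) if t == label]
        centroids[label] = [sum(col) / len(rows) for col in zip(*rows)]
    return centroids


def nearest_centroid_predict(centroids, x):
    """Return the label whose centroid is closest in squared Euclidean distance."""
    return min(centroids, key=lambda c: sum((a - b) ** 2 for a, b in zip(x, centroids[c])))


def cv_accuracy(X, y, k=5, seed=0):
    """k-fold CV estimate of generalization accuracy."""
    folds = k_fold_indices(len(X), k, seed)
    accs = []
    for test_idx in folds:
        test_set = set(test_idx)
        train_idx = [i for i in range(len(X)) if i not in test_set]
        model = nearest_centroid_fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        correct = sum(nearest_centroid_predict(model, X[i]) == y[i] for i in test_idx)
        accs.append(correct / len(test_idx))
    return sum(accs) / k


# Synthetic two-class data (hypothetical).
rng = random.Random(1)
X = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(50)] + \
    [[rng.gauss(3, 1), rng.gauss(3, 1)] for _ in range(50)]
y = [0] * 50 + [1] * 50
print(cv_accuracy(X, y, k=5))        # 5-fold CV
print(cv_accuracy(X, y, k=len(X)))   # leave-one-out CV
```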

nCV

nCV is an effective way to incorporate feature selection and machine-learning parameter tuning into the training of an optimal prediction model. In the standard nCV approach, the data are split into k outer folds, and inner folds are then created within each outer training set to select features, tune parameters and train models. Because feature selection and tuning see only the outer training set, the inner nest limits the information leakage between outer folds that feature selection can otherwise cause, and so prevents the outer-fold CV error from being a biased estimate of performance on independent data. However, standard nCV is computationally intensive because of the number of classifiers that must be trained in the inner nests (Parvandeh et al., 2019; Varoquaux et al., 2017; Wetherill et al., 2019). In addition, we show that nCV may choose an excess of irrelevant features, which could affect the biological interpretation of models.
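A minimal, hypothetical sketch of standard nCV, assuming a two-class problem, a toy nearest-centroid classifier and a mean-difference feature ranking; none of these come from the paper, and the paper's consensus-nCV method is not shown. The only tuned parameter here is the number of top-ranked features `m`. The point is that ranking and the choice of `m` happen strictly inside each outer training set, so the outer test fold never influences feature selection.

```python
import random


def k_fold_indices(n, k, rng):
    """Shuffle 0..n-1 with the given RNG and deal the indices into k folds."""
    idx = list(range(n))
    rng.shuffle(idx)
    return [idx[f::k] for f in range(k)]


def subset(X, y, idx):
    return [X[i] for i in idx], [y[i] for i in idx]


def rank_features(X, y):
    """Rank features by |mean(class 1) - mean(class 0)|, largest first (hypothetical filter)."""
    def score(j):
        c0 = [x[j] for x, t in zip(X, y) if t == 0]
        c1 = [x[j] for x, t in zip(X, y) if t == 1]
        return abs(sum(c1) / len(c1) - sum(c0) / len(c0))
    return sorted(range(len(X[0])), key=score, reverse=True)


def fit_predict_accuracy(X_tr, y_tr, X_te, y_te, feats):
    """Train a nearest-centroid classifier on `feats` only and return test accuracy."""
    cents = {}
    for c in set(y_tr):
        rows = [[x[j] for j in feats] for x, t in zip(X_tr, y_tr) if t == c]
        cents[c] = [sum(col) / len(rows) for col in zip(*rows)]

    def predict(x):
        z = [x[j] for j in feats]
        return min(cents, key=lambda c: sum((a - b) ** 2 for a, b in zip(z, cents[c])))

    return sum(predict(x) == t for x, t in zip(X_te, y_te)) / len(y_te)


def nested_cv(X, y, sizes=(1, 2, 5, 10), k_outer=5, k_inner=5, seed=0):
    rng = random.Random(seed)
    n = len(X)
    outer_acc, chosen = [], []
    for test_idx in k_fold_indices(n, k_outer, rng):
        held_out = set(test_idx)
        train_idx = [i for i in range(n) if i not in held_out]
        X_tr, y_tr = subset(X, y, train_idx)
        # Inner nest: only the outer training set is used to choose m.
        inner_scores = {m: 0.0 for m in sizes}  # summed inner-fold accuracy per m
        for val_pos in k_fold_indices(len(train_idx), k_inner, rng):
            val_set = set(val_pos)
            fit_pos = [i for i in range(len(train_idx)) if i not in val_set]
            X_fit, y_fit = subset(X_tr, y_tr, fit_pos)
            X_val, y_val = subset(X_tr, y_tr, val_pos)
            ranking = rank_features(X_fit, y_fit)  # ranked without the validation fold
            for m in sizes:
                inner_scores[m] += fit_predict_accuracy(X_fit, y_fit, X_val, y_val, ranking[:m])
        best_m = max(sizes, key=lambda m: inner_scores[m])
        # Re-rank on the whole outer training set, then score the untouched outer test fold.
        feats = rank_features(X_tr, y_tr)[:best_m]
        X_te, y_te = subset(X, y, test_idx)
        outer_acc.append(fit_predict_accuracy(X_tr, y_tr, X_te, y_te, feats))
        chosen.append(sorted(feats))
    return sum(outer_acc) / k_outer, chosen


# Synthetic data (hypothetical): 20 features, only features 0 and 1 are informative.
rng = random.Random(42)
y = [0] * 40 + [1] * 40
X = [[rng.gauss(2.0 * t if j < 2 else 0.0, 1.0) for j in range(20)] for t in y]
acc, feature_sets = nested_cv(X, y)
print(acc, feature_sets)
```

Each outer fold trains `k_inner * len(sizes)` inner models plus one refit, which illustrates where the computational cost of standard nCV comes from. Comparing the per-fold `feature_sets` is also a quick way to see whether noise features are being selected.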